publications

2025

-

Split Gibbs diffusion posterior sampling for nonlinear inverse problems with application to electrical impedance tomography. Yuzhe Ling, Xiongwen Ke, Huihui Wang, and Qingping Zhou. 2025.

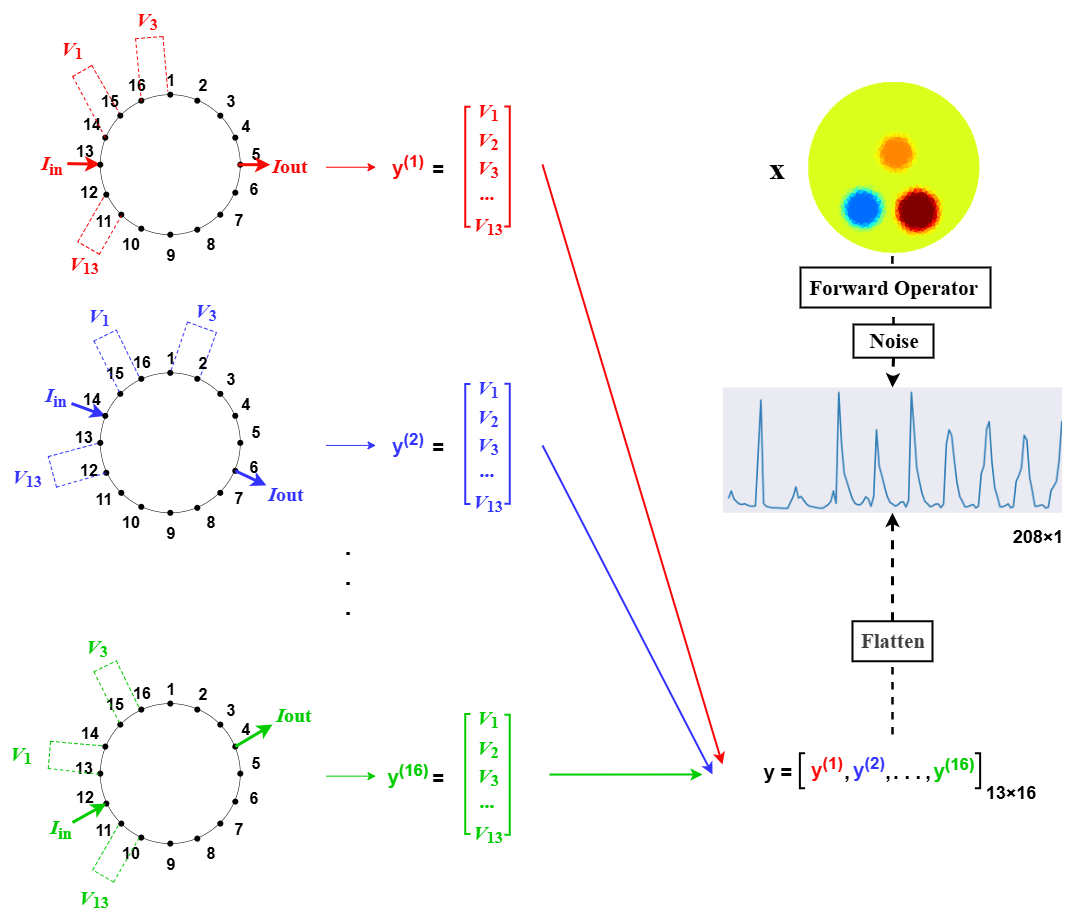

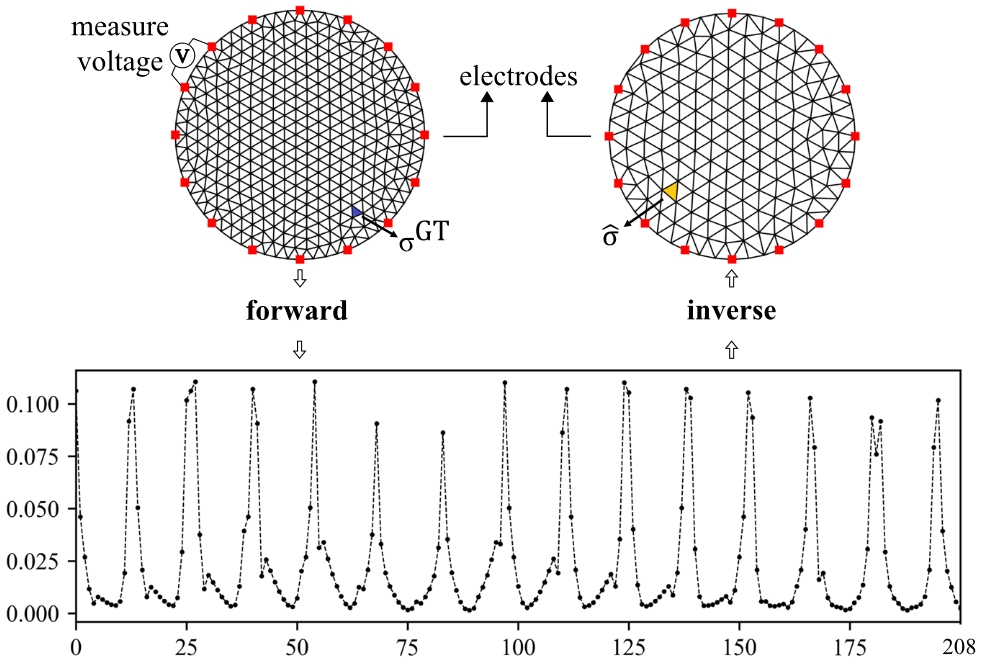

Diffusion models have become particularly prominent for generating high-quality samples. Consequently, they have become widely adopted for modeling priors in image reconstruction. However, integrating diffusion priors into nonlinear inverse problems presents significant computational challenges, primarily due to the inherent complexity of nonlinear forward operators. This work presents a new approach for incorporating a diffusion prior into the split Gibbs sampler, denoted as DP-SGS, to sample from the posterior distribution in nonlinear settings. DP-SGS simplifies the complex posterior sampling problem by introducing an auxiliary variable that decomposes it into two simple conditional distributions: a forward model that handles the likelihood term and a prior model implicitly determined by the pre-trained diffusion model. Both conditional distributions are efficiently approximated as Gaussian distributions using first-order Taylor expansion for the prior model and Laplace approximation for the forward model, thereby ensuring computational tractability despite the nonlinear forward operator. Experiments on the challenging electrical impedance tomography (EIT) imaging problem demonstrate that our method enhances solution accuracy while achieving rapid convergence rates, confirming its effectiveness for solving nonlinear inverse problems.
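The auxiliary-variable splitting described in this abstract can be illustrated on a toy problem. The sketch below is not DP-SGS: it assumes a scalar linear forward model `y = a * x + noise` and a Gaussian prior in place of the diffusion prior, so both conditionals are exactly Gaussian and no Taylor or Laplace approximation is needed. All names (`split_gibbs_1d`, `rho`, and so on) are hypothetical.

```python
import math
import random


def split_gibbs_1d(y, a, sigma, tau, rho, n_samples, seed=0):
    """Toy split Gibbs sampler for a scalar linear-Gaussian problem.

    Augmented target (assumed for illustration):
        pi(x, z) ∝ exp(-(y - a*x)^2 / (2 sigma^2))   # likelihood acts on x
                 * exp(-z^2 / (2 tau^2))             # prior acts on z
                 * exp(-(x - z)^2 / (2 rho^2))       # coupling of x and z
    """
    rng = random.Random(seed)
    x, z = 0.0, 0.0
    xs = []
    for _ in range(n_samples):
        # Likelihood step: x | z is Gaussian.
        prec_x = a * a / sigma**2 + 1.0 / rho**2
        mean_x = (a * y / sigma**2 + z / rho**2) / prec_x
        x = rng.gauss(mean_x, math.sqrt(1.0 / prec_x))
        # Prior step: z | x is Gaussian.
        prec_z = 1.0 / tau**2 + 1.0 / rho**2
        mean_z = (x / rho**2) / prec_z
        z = rng.gauss(mean_z, math.sqrt(1.0 / prec_z))
        xs.append(x)
    return xs


def augmented_posterior_mean(y, a, sigma, tau, rho):
    """Exact mean of x under the augmented target.

    Marginalizing z widens the prior on x to N(0, tau^2 + rho^2).
    """
    prec = a * a / sigma**2 + 1.0 / (tau**2 + rho**2)
    return (a * y / sigma**2) / prec
```

As `rho` shrinks, the augmented marginal of `x` approaches the original posterior, at the cost of stronger correlation between successive samples.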

-

Sequential Bayesian Design for Efficient Surrogate Construction in the Inversion of Darcy Flows. Hongji Wang, Hongqiao Wang, Jinyong Ying, and Qingping Zhou. 2025.

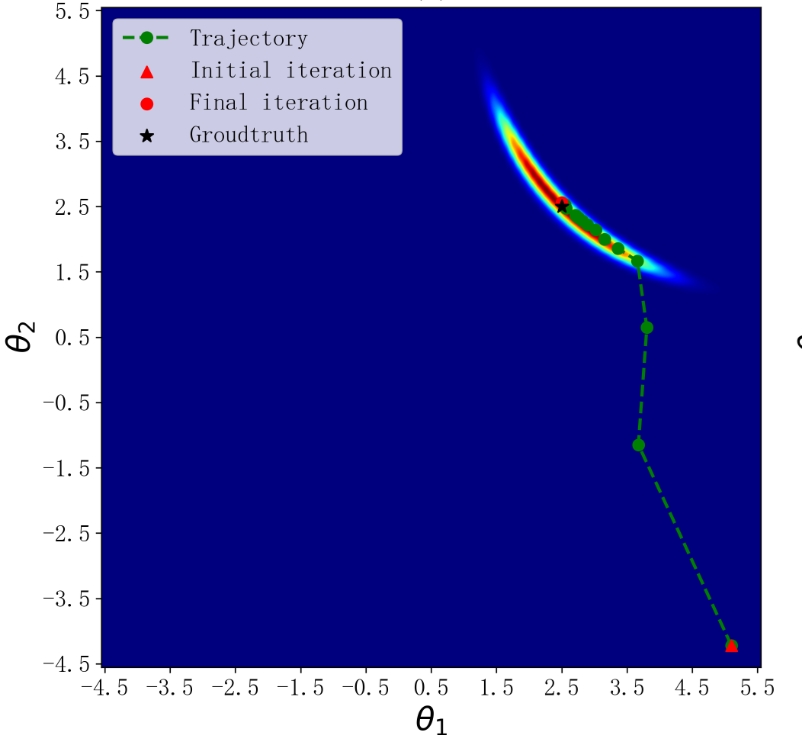

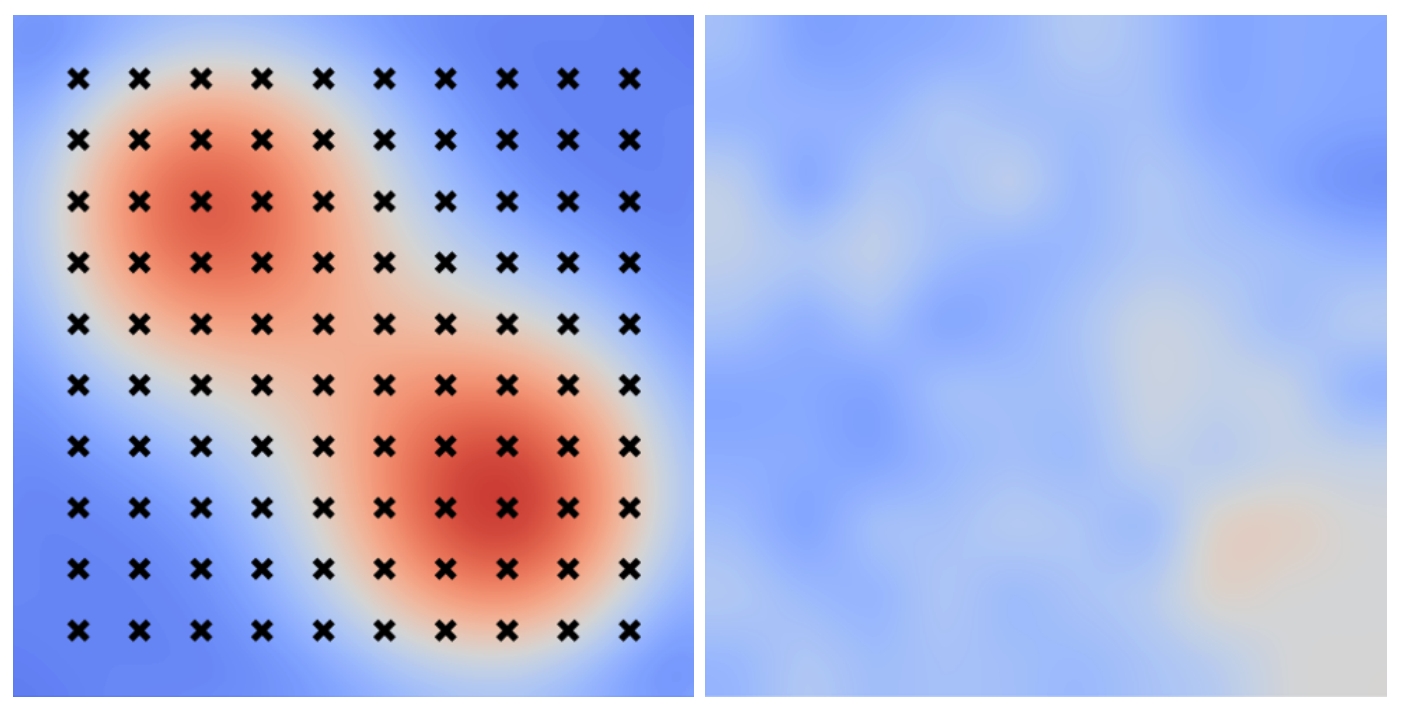

Inverse problems governed by partial differential equations (PDEs) play a crucial role in various fields, including computational science, image processing, and engineering. In particular, the Darcy flow equation is a fundamental equation in fluid mechanics that is central to understanding fluid flow through porous media. Bayesian methods provide an effective approach for solving PDE inverse problems, but their numerical implementation requires numerous evaluations of computationally expensive forward solvers. Therefore, the adoption of surrogate models with lower computational costs is essential. However, constructing a globally accurate surrogate model for high-dimensional complex problems demands high model capacity and large amounts of data. To address this challenge, this study proposes an efficient locally accurate surrogate that focuses on the high-probability regions of the true likelihood in inverse problems, with relatively low model complexity and modest training data requirements. Additionally, we introduce a sequential Bayesian design strategy to acquire the proposed surrogate, since the high-probability region of the likelihood is unknown. The strategy treats the posterior evolution process of sequential Bayesian design as a Gaussian process, enabling algorithmic acceleration through a one-step-ahead prior. The complete algorithmic framework is referred to as Sequential Bayesian design for locally accurate surrogate (SBD-LAS). Finally, three experiments based on the Darcy flow equation demonstrate the advantages of the proposed method in terms of both inversion accuracy and computational speed.

2024

-

Fused L1/2 prior for large scale linear inverse problem with Gibbs bouncy particle sampler. Xiongwen Ke, Yanan Fan, and Qingping Zhou. 2024.

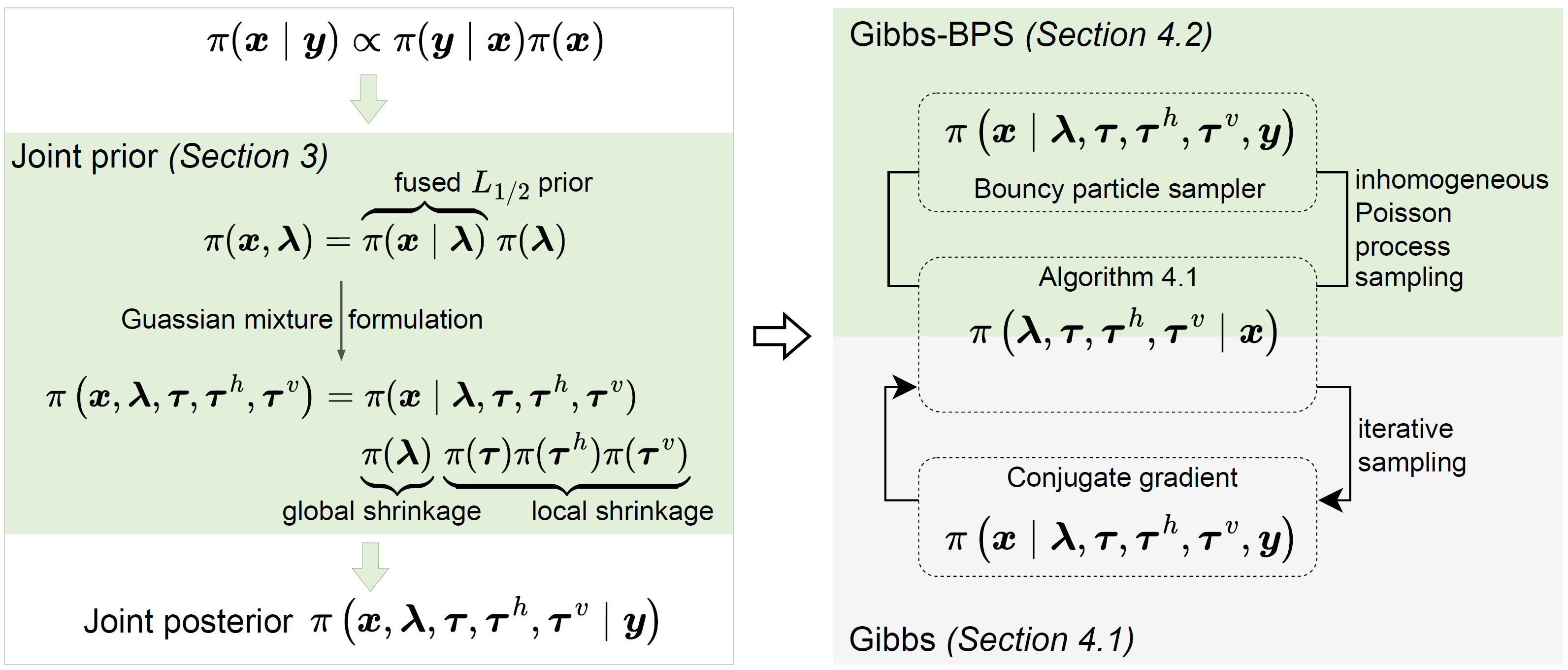

In this paper, we study a Bayesian approach for solving large-scale linear inverse problems arising in various scientific and engineering fields. We propose a fused L1/2 prior with edge-preserving and sparsity-promoting properties and show that it can be formulated as a Gaussian mixture Markov random field. Since the density function of this family of priors is neither log-concave nor Lipschitz, gradient-based Markov chain Monte Carlo methods cannot be applied to sample the posterior. Thus, we present a Gibbs sampler in which all the conditional posteriors involved have closed-form expressions. The Gibbs sampler works well for small problems, but it is computationally intractable for large-scale problems because it needs to sample from a high-dimensional Gaussian distribution. To reduce the computational burden, we construct a Gibbs bouncy particle sampler (Gibbs-BPS) based on a piecewise deterministic Markov process. This new sampler combines elements of the Gibbs sampler with the bouncy particle sampler, and its computational complexity is an order of magnitude smaller. We show that the new sampler converges to the target distribution. With computed tomography examples, we demonstrate that the proposed method shows competitive performance with existing popular Bayesian methods and is highly efficient in large-scale problems.

-

Deep unrolling networks with recurrent momentum acceleration for nonlinear inverse problems. Qingping Zhou, Jiayu Qian, Junqi Tang, and Jinglai Li. Inverse Problems, 2024.

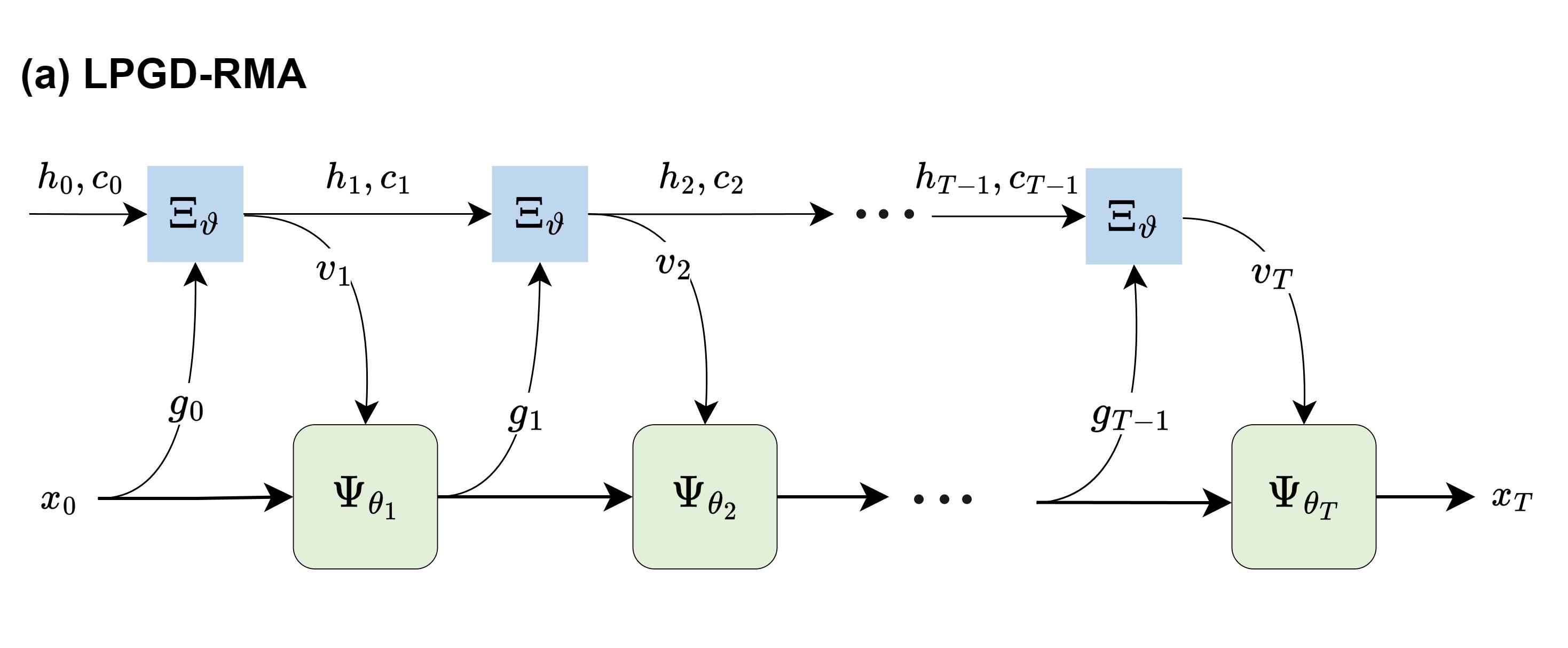

Combining the strengths of model-based iterative algorithms and data-driven deep learning solutions, deep unrolling networks (DuNets) have become a popular tool to solve inverse imaging problems. Although DuNets have been successfully applied to many linear inverse problems, their performance tends to be impaired by nonlinear problems. Inspired by momentum acceleration techniques that are often used in optimization algorithms, we propose a recurrent momentum acceleration (RMA) framework that uses a long short-term memory recurrent neural network (LSTM-RNN) to simulate the momentum acceleration process. The RMA module leverages the ability of the LSTM-RNN to learn and retain knowledge from the previous gradients. We apply RMA to two popular DuNets – the learned proximal gradient descent (LPGD) and the learned primal-dual (LPD) methods, resulting in LPGD-RMA and LPD-RMA, respectively. We provide experimental results on two nonlinear inverse problems: a nonlinear deconvolution problem, and an electrical impedance tomography problem with limited boundary measurements. In the first experiment, we observe that the improvement due to RMA increases substantially with the nonlinearity of the problem. The results of the second example further demonstrate that the RMA schemes can significantly improve the performance of DuNets in strongly ill-posed problems.

-

A comparative study of variational autoencoders, normalizing flows, and score-based diffusion models for electrical impedance tomography. Huihui Wang, Guixian Xu, and Qingping Zhou. Journal of Inverse and Ill-posed Problems, 2024.

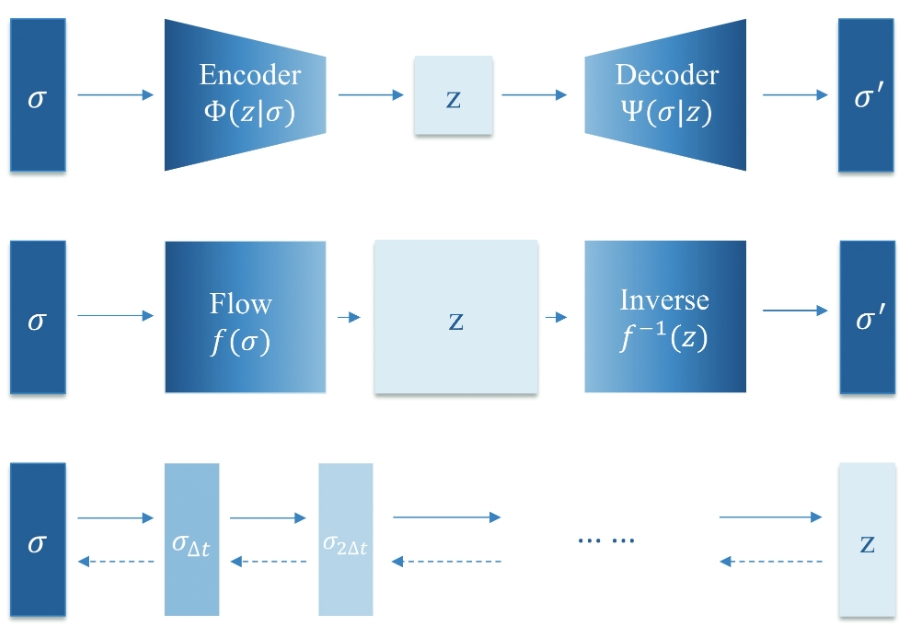

Electrical Impedance Tomography (EIT) is a widely employed imaging technique in industrial inspection, geophysical prospecting, and medical imaging. However, the inherent nonlinearity and ill-posedness of EIT image reconstruction present challenges for classical regularization techniques, such as the critical selection of regularization terms and the lack of prior knowledge. Deep generative models (DGMs) have been shown to play a crucial role in learning implicit regularizers and prior knowledge. This study aims to investigate the potential of three DGMs – variational autoencoder networks, normalizing flow, and score-based diffusion model – to learn implicit regularizers in learning-based EIT imaging. We first introduce background information on EIT imaging and its inverse problem formulation. Next, we propose three algorithms for performing EIT inverse problems based on corresponding DGMs. Finally, we present numerical and visual experiments, which reveal that (1) no single method consistently outperforms the others across all settings, and (2) when reconstructing an object with two anomalies using a well-trained model based on a training dataset containing four anomalies, the conditional normalizing flow (CNF) model exhibits the best generalization in low-level noise, while the conditional score-based diffusion model (CSD*) demonstrates the best generalization in high-level noise settings. We hope our preliminary efforts will encourage other researchers to assess their DGMs in EIT and other nonlinear inverse problems.

-

A MCMC Method Based on Surrogate Model and Gaussian Process Parameterization for Infinite Bayesian PDE Inversion. Zheng Hu, Hongqiao Wang, and Qingping Zhou. Journal of Computational Physics, 2024.

This work focuses on an inversion problem derived from parametric partial differential equations (PDEs) with an infinite-dimensional parameter, represented as a coefficient function. The objective is to estimate this coefficient function accurately despite having only noisy measurements of the PDE solution at sparse input points. Conventional methods for inversion require numerous calls to a refined PDE solver, resulting in significant computational complexity, especially for challenging PDE problems, making the inference of the coefficient function practically infeasible. To address this issue, we propose an MCMC method that combines a deep learning-based surrogate and Gaussian process parameterization to efficiently infer the posterior of the coefficient function. The surrogate model is a combination of a cost-effective coarse PDE solver and a neural network-based transformation, which provides an approximate solution derived from the refined PDE solver but based on the coarse PDE solution. The coefficient function is represented by a Gaussian process with a finite number of spatially dependent parameters, and this parametric representation helps the preconditioned Crank-Nicolson (pCN) Markov chain Monte Carlo method sample efficiently from the posterior distribution. An approximate Bayesian computation method is used to construct an informative dataset for learning the transformation. Our numerical examples demonstrate the effectiveness of this approach in accurately estimating the coefficient function while significantly reducing the computational burden associated with traditional inversion techniques.

-

Enhancing electrical impedance tomography reconstruction using learned half-quadratic splitting networks with Anderson acceleration. Guixian Xu, Huihui Wang, and Qingping Zhou. Journal of Scientific Computing, 2024.

Electrical Impedance Tomography (EIT) is widely applied in medical diagnosis, industrial inspection, and environmental monitoring. Combining the physical principles of the imaging system with the advantages of data-driven deep learning networks, physics-embedded deep unrolling networks have recently emerged as a promising solution in computational imaging. However, the inherent nonlinear and ill-posed properties of EIT image reconstruction still present challenges to existing methods in terms of accuracy and stability. To tackle this challenge, we propose the learned half-quadratic splitting (HQSNet) algorithm for incorporating physics into learning-based EIT imaging. We then apply Anderson acceleration (AA) to the HQSNet algorithm, denoted as AA-HQSNet, which can be interpreted as AA applied to the Gauss-Newton step and the learned proximal gradient descent step of the HQSNet, respectively. AA is a widely-used technique for accelerating the convergence of fixed-point iterative algorithms and has gained significant interest in numerical optimization and machine learning. However, the technique has received little attention in the inverse problems community thus far. Employing AA enhances the convergence rate compared to the standard HQSNet while simultaneously avoiding artifacts in the reconstructions. Lastly, we conduct rigorous numerical and visual experiments to show that the AA module strengthens the HQSNet, leading to robust, accurate, and considerably superior reconstructions compared to state-of-the-art methods. Our Anderson acceleration scheme to enhance HQSNet is generic and can be applied to improve the performance of various physics-embedded deep learning methods.
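For readers unfamiliar with Anderson acceleration, the following is a minimal sketch of its simplest variant (memory depth 1) applied to a generic fixed-point map `x = G(x)`. It is not the AA-HQSNet scheme from the paper; the function name, the depth-1 restriction, and the stopping rule are assumptions made for illustration.

```python
import math


def anderson_fixed_point(G, x0, tol=1e-10, max_iter=200, accelerate=True):
    """Solve x = G(x) for a list-valued x with Anderson acceleration of depth 1.

    Returns (x, k), where k is the number of evaluations of G performed.
    With accelerate=False this is the plain iteration x <- G(x).
    """
    x = list(x0)
    g_prev = f_prev = None
    for k in range(1, max_iter + 1):
        g = G(x)
        f = [gi - xi for gi, xi in zip(g, x)]  # fixed-point residual G(x) - x
        if math.sqrt(sum(fi * fi for fi in f)) < tol:
            return x, k
        if accelerate and f_prev is not None:
            # Least-squares mixing weight from the two most recent residuals.
            df = [fi - fp for fi, fp in zip(f, f_prev)]
            denom = sum(d * d for d in df)
            gamma = sum(fi * d for fi, d in zip(f, df)) / denom if denom > 0 else 0.0
            x_next = [gi - gamma * (gi - gp) for gi, gp in zip(g, g_prev)]
        else:
            x_next = g  # plain fixed-point step
        g_prev, f_prev = g, f
        x = x_next
    return x, max_iter
```

For a scalar map such as `G = lambda v: [math.cos(v[0])]`, depth-1 Anderson acceleration coincides with the secant method applied to `G(x) - x`, which is why it needs far fewer evaluations than the plain iteration.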

2023

-

An Uncertainty-Guided Deep Learning Method Facilitates Rapid Screening of CYP3A4 Inhibitors. Ruixuan Wang, Zhikang Liu, Jiahao Gong, Qingping Zhou, Xiaoqing Guan, and Guangbo Ge. Journal of Chemical Information and Modeling, 2023.

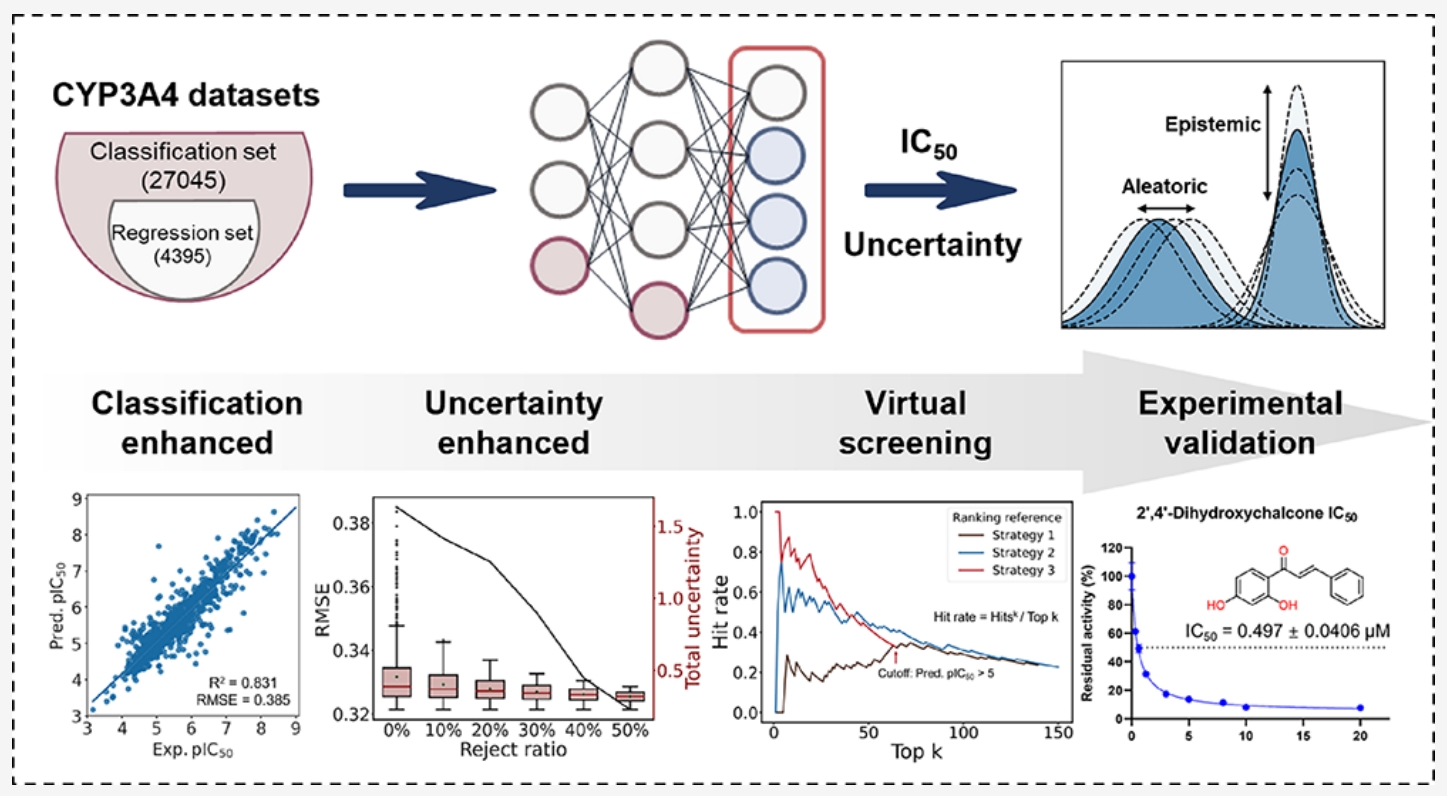

Cytochrome P450 3A4 (CYP3A4), a prominent member of the P450 enzyme superfamily, plays a crucial role in metabolizing various xenobiotics, including over 50% of clinically significant drugs. Evaluating CYP3A4 inhibition before drug approval is essential to avoiding potentially harmful pharmacokinetic drug-drug interactions (DDIs) and adverse drug reactions (ADRs). Despite the development of several CYP inhibitor prediction models, the primary approach for screening CYP inhibitors still relies on experimental methods. This might stem from the limitations of existing models, which only provide deterministic classification outcomes instead of precise inhibition intensity (e.g., IC50) and often suffer from inadequate prediction reliability. To address this challenge, we propose an uncertainty-guided regression model to accurately predict the IC50 values of anti-CYP3A4 activities. First, a comprehensive data set of CYP3A4 inhibitors was compiled, consisting of 27,045 compounds with classification labels, including 4395 compounds with explicit IC50 values. Second, by integrating the predictions of the classification model trained on a larger data set and introducing an evidential uncertainty method to rank prediction confidence, we obtained a high-precision and reliable regression model. Finally, we use the evidential uncertainty values as a trustworthy indicator to perform a virtual screening of an in-house compound set. The in vitro experiment results revealed that this new indicator significantly improved the hit ratio and reduced false positives among the top-ranked compounds. Specifically, among the top 20 compounds ranked with uncertainty, 15 compounds were identified as novel CYP3A4 inhibitors, and three of them exhibited activities less than 1 μM. In summary, our findings highlight the effectiveness of incorporating uncertainty in compound screening, providing a promising strategy for drug discovery and development.

2020

-

Bayesian Inference and Uncertainty Quantification for Medical Image Reconstruction with Poisson Data. Qingping Zhou, Tengchao Yu, Xiaoqun Zhang, and Jinglai Li. SIAM Journal on Imaging Sciences, 2020.

We provide a complete framework for performing infinite dimensional Bayesian inference and uncertainty quantification for image reconstruction with Poisson data. In particular, we address the following issues to make the Bayesian framework applicable in practice. We first introduce a positivity-preserving reparametrization, and we prove that under the reparametrization and a hybrid prior, the posterior distribution is well-posed in the infinite dimensional setting. Second, we provide a dimension-independent Markov chain Monte Carlo algorithm, based on the preconditioned Crank–Nicolson Langevin method, in which we use a primal-dual scheme to compute the offset direction. Third, we give a method combining the model discrepancy method and maximum likelihood estimation to determine the regularization parameter in the hybrid prior. Finally, we propose to use the obtained posterior distribution to detect artifacts in a recovered image. We provide an example to demonstrate the effectiveness of the proposed method.

-

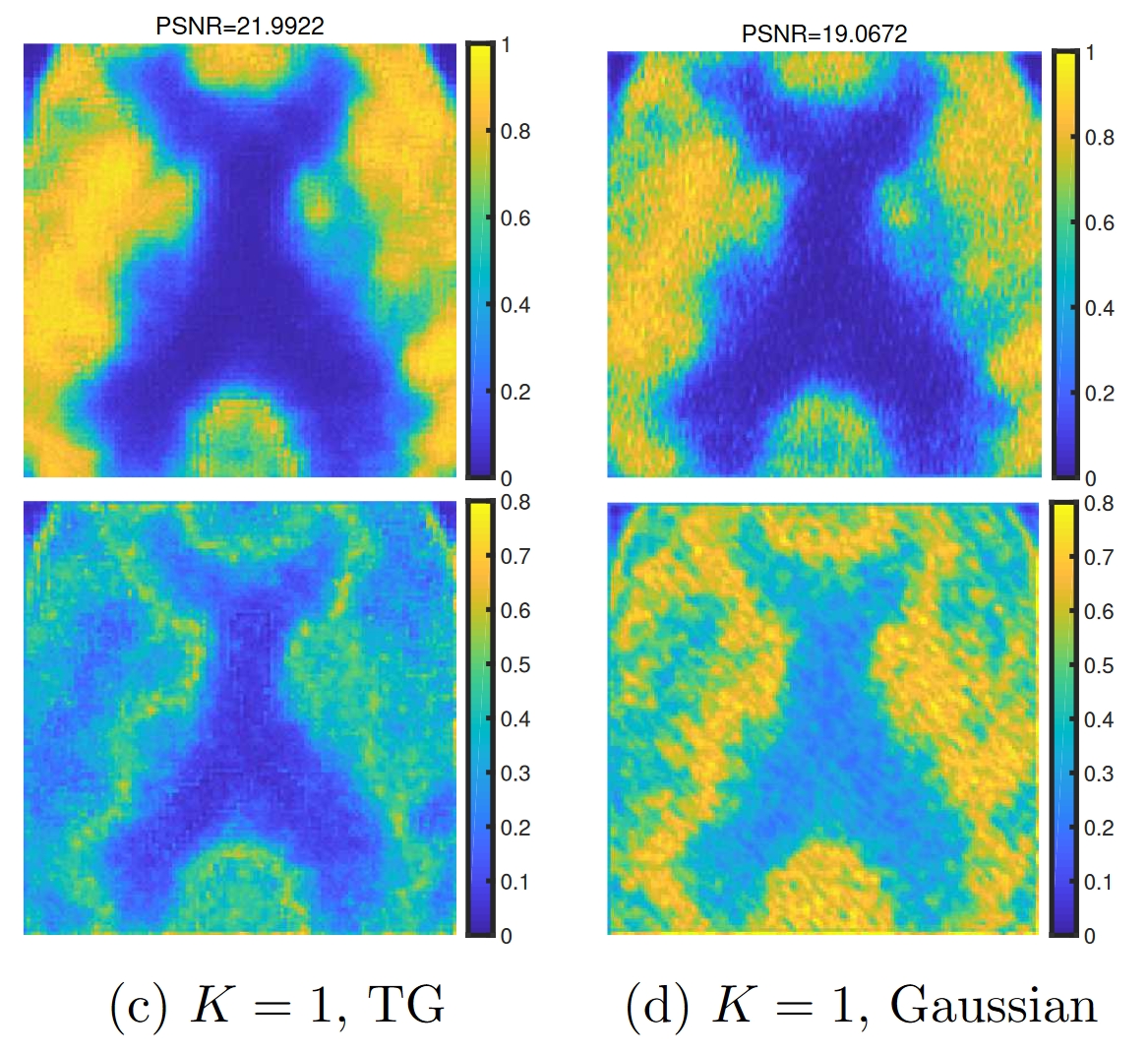

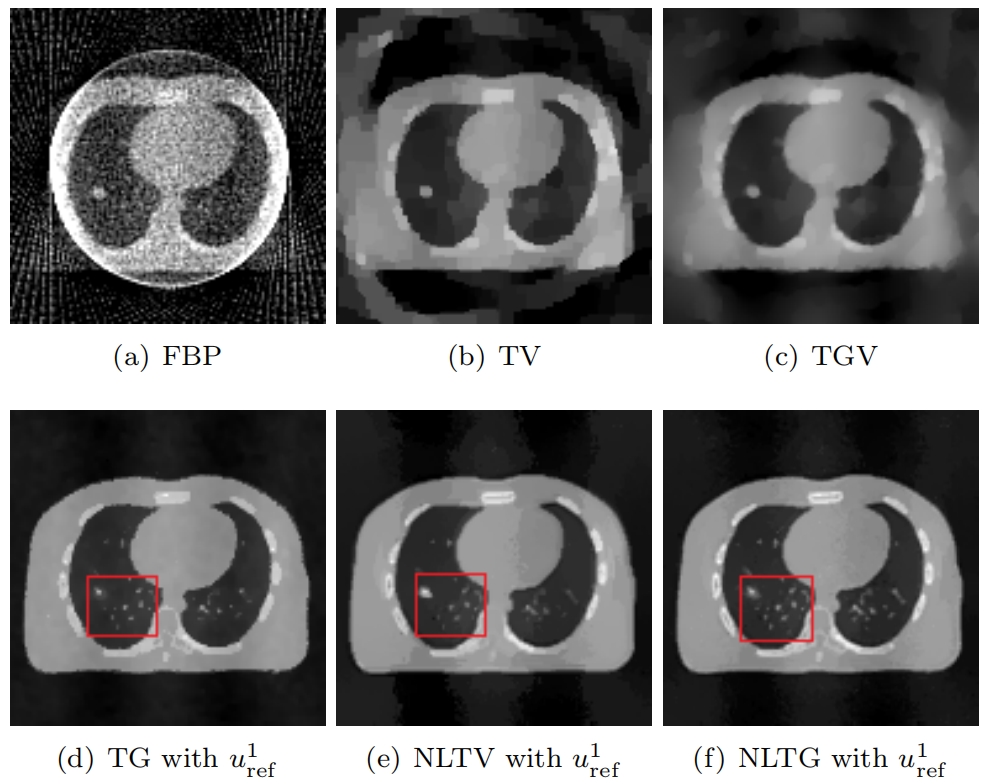

Nonlocal TV-Gaussian prior for Bayesian inverse problems with applications to limited CT reconstruction. Didi Lv, Qingping Zhou, Jae Kyu Choi, Jinglai Li, and Xiaoqun Zhang. Inverse Problems & Imaging, 2020.

Bayesian inference methods have been widely applied in inverse problems because they can characterize the uncertainty of the estimates. The prior distribution of the unknown plays an essential role in Bayesian inference, and a good prior distribution can significantly improve the inference results. In this paper, we propose a hybrid prior distribution, the NLTG prior, that combines nonlocal total variation (NLTV) regularization with a Gaussian distribution. The advantage of this hybrid prior is two-fold. First, the proposed prior models both texture and geometric structures present in images through the NLTV. Second, the Gaussian reference measure provides the flexibility to incorporate structural information from a reference image. Some theoretical properties are established for the hybrid prior. We apply the proposed prior to a limited tomography reconstruction problem, which is difficult because of severely missing data. Both maximum a posteriori and conditional mean estimates are computed through two efficient methods, and the numerical experiments validate the advantages and feasibility of the proposed NLTG prior.

2018

-

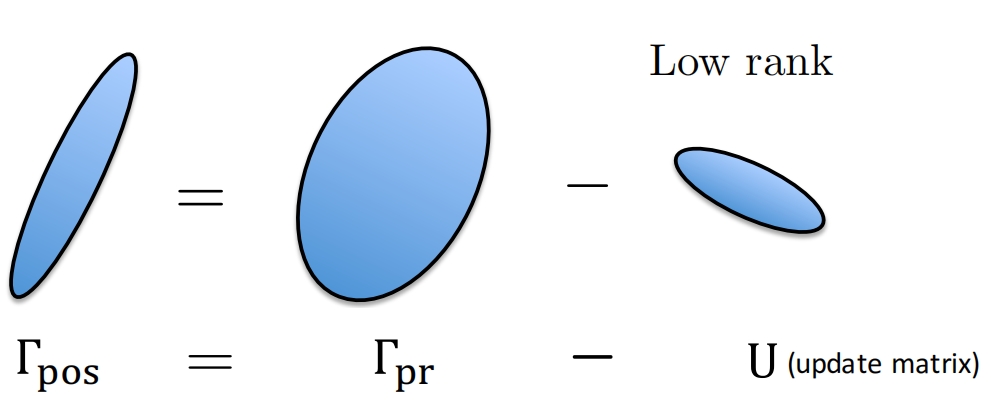

An approximate empirical Bayesian method for large-scale linear-Gaussian inverse problems. Qingping Zhou, Wenqing Liu, Jinglai Li, and Youssef M Marzouk. Inverse Problems, Jun 2018.

We study Bayesian inference methods for solving linear inverse problems, focusing on hierarchical formulations where the prior or the likelihood function depends on unspecified hyperparameters. In practice, these hyperparameters are often determined via an empirical Bayesian method that maximizes the marginal likelihood function, i.e. the probability density of the data conditional on the hyperparameters. Evaluating the marginal likelihood, however, is computationally challenging for large-scale problems. In this work, we present a method to approximately evaluate marginal likelihood functions, based on a low-rank approximation of the update from the prior covariance to the posterior covariance. We show that this approximation is optimal in a minimax sense. Moreover, we provide an efficient algorithm to implement the proposed method, based on a combination of the randomized SVD and a spectral approximation method to compute square roots of the prior covariance matrix. Several numerical examples demonstrate good performance of the proposed method.
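To make the quantity in this abstract concrete, the marginal likelihood can be written out for a standard linear-Gaussian model. The notation below ($G$, $\Gamma_{\mathrm{pr}}$, $\Gamma_{\mathrm{obs}}$, $\theta$, $\lambda_i$, $r$) is assumed here for illustration and is not taken from the paper; the last line shows only the generic idea of truncating a spectrum, not the paper's specific approximation or its optimality result.

$$
\begin{aligned}
y &= Gx + \eta, \qquad x \sim \mathcal{N}\big(0, \Gamma_{\mathrm{pr}}(\theta)\big), \qquad \eta \sim \mathcal{N}\big(0, \Gamma_{\mathrm{obs}}(\theta)\big), \qquad y \in \mathbb{R}^m, \\
\log p(y \mid \theta) &= -\tfrac{1}{2}\, y^{\top} \Sigma^{-1} y \;-\; \tfrac{1}{2} \log\det \Sigma \;-\; \tfrac{m}{2} \log 2\pi, \qquad \Sigma = G\,\Gamma_{\mathrm{pr}}\,G^{\top} + \Gamma_{\mathrm{obs}}, \\
\log\det \Sigma &= \log\det \Gamma_{\mathrm{obs}} + \log\det\big(I + \tilde{H}\big), \qquad \tilde{H} = \Gamma_{\mathrm{pr}}^{1/2}\, G^{\top} \Gamma_{\mathrm{obs}}^{-1} G\, \Gamma_{\mathrm{pr}}^{1/2}, \\
\log\det\big(I + \tilde{H}\big) &\approx \sum_{i=1}^{r} \log(1 + \lambda_i), \qquad \lambda_1 \ge \dots \ge \lambda_r \ \text{the leading eigenvalues of } \tilde{H}.
\end{aligned}
$$

The third line follows from Sylvester's determinant identity. The truncation in the last line is accurate when the eigenvalues of $\tilde{H}$ decay quickly, which is the regime where a rank-$r$ factorization (for example from a randomized SVD) is cheap to compute.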

2017

-

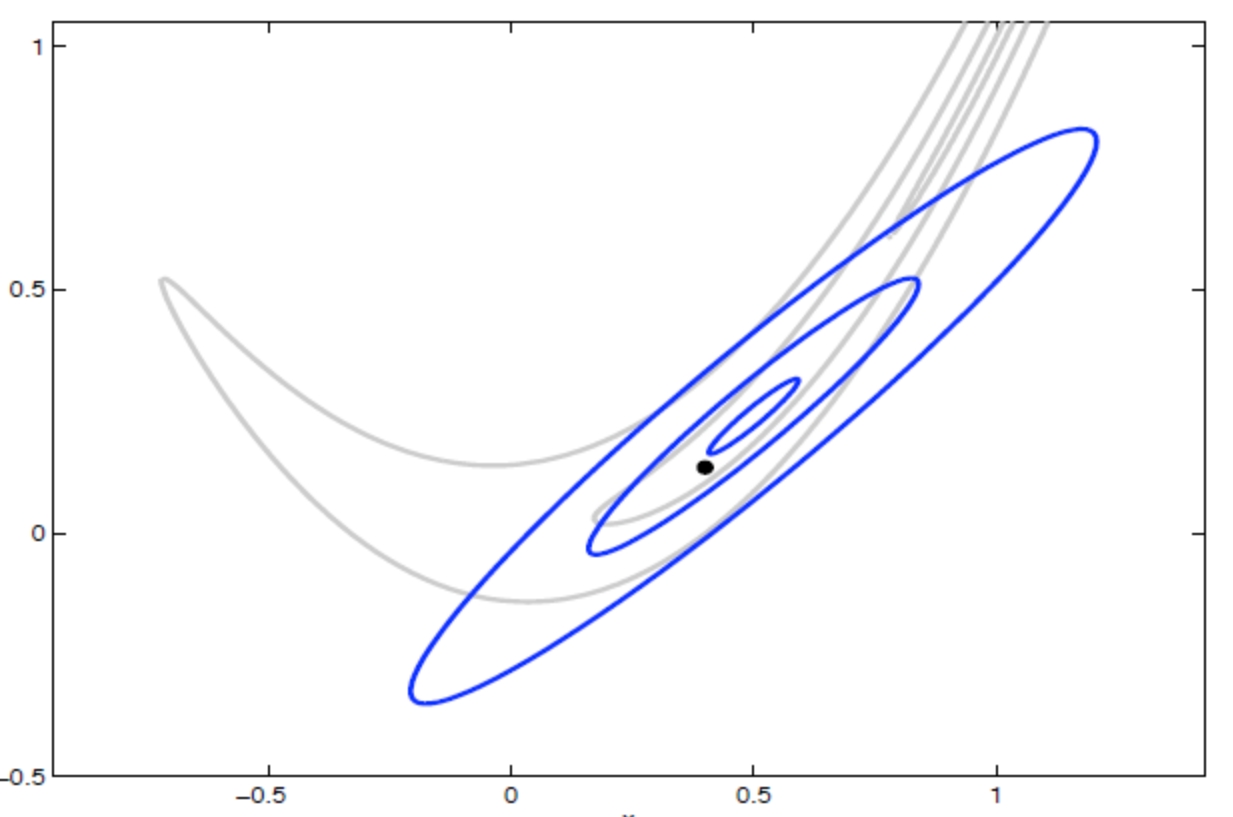

A Hybrid Adaptive MCMC Algorithm in Function Spaces. Qingping Zhou, Zixi Hu, Zhewei Yao, and Jinglai Li. SIAM/ASA Journal on Uncertainty Quantification, Jun 2017.

The preconditioned Crank–Nicolson (pCN) method is a Markov chain Monte Carlo (MCMC) scheme, specifically designed to perform Bayesian inferences in function spaces. Unlike many standard MCMC algorithms, the pCN method can preserve the sampling efficiency under the mesh refinement, a property referred to as being dimension independent. In this work, we consider an adaptive strategy to further improve the efficiency of pCN. In particular, we develop a hybrid adaptive MCMC method: the algorithm performs an adaptive Metropolis scheme in a chosen finite dimensional subspace and a standard pCN algorithm in the complement space of the chosen subspace. We show that the proposed algorithm satisfies certain important ergodicity conditions. Finally, with numerical examples, we demonstrate that the proposed method has competitive performance with existing adaptive algorithms.
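As a point of reference for the pCN method named in this abstract, here is a minimal sketch of the standard (non-adaptive) pCN update on a scalar toy problem. It assumes a standard normal prior `N(0, 1)` and a user-supplied negative log-likelihood `phi`; the function name and defaults are hypothetical, and the hybrid adaptive scheme from the paper is not implemented.

```python
import math
import random


def pcn_sampler(phi, n_samples, beta=0.5, seed=0):
    """Standard pCN sampler for a posterior ∝ exp(-phi(x)) * N(x; 0, 1).

    phi is the negative log-likelihood; beta in (0, 1] is the step size.
    Returns (samples, acceptance_rate).
    """
    rng = random.Random(seed)
    x = rng.gauss(0.0, 1.0)  # initialize from the prior
    phi_x = phi(x)
    samples, accepted = [], 0
    for _ in range(n_samples):
        # The pCN proposal leaves the Gaussian prior invariant.
        prop = math.sqrt(1.0 - beta**2) * x + beta * rng.gauss(0.0, 1.0)
        phi_prop = phi(prop)
        # The acceptance ratio involves only the likelihood potential.
        log_alpha = phi_x - phi_prop
        if log_alpha >= 0.0 or rng.random() < math.exp(log_alpha):
            x, phi_x = prop, phi_prop
            accepted += 1
        samples.append(x)
    return samples, accepted / n_samples
```

For example, with `phi = lambda x: 0.5 * (1.0 - x) ** 2` (one observation `y = 1` with unit noise), the exact posterior is Gaussian with mean 0.5 and variance 0.5, which gives a simple check on the sample mean.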